The Role of AI in Transforming Content Moderation

Sep 27, 2024

As online platforms grow and users generate massive amounts of content, moderating that content becomes increasingly crucial. Ensuring compliance with community standards and legal regulations is essential, but manual moderation struggles to keep up. That’s where AI-powered moderation steps in, revolutionizing the process for businesses.

The Limitations of Traditional Content Moderation

Traditionally, content moderation has relied heavily on human moderators. These teams, while diligent, often face severe challenges:

Volume: Large platforms receive posts, comments, and images faster than any human team can review them one by one.

Speed: By the time inappropriate or harmful content is flagged and removed, it may have already caused damage.

Bias: Human moderators bring their own experiences and perspectives, which can inadvertently skew decision-making.

The stakes are high. One wrong decision can lead to public backlash, regulatory fines, or, worse, eroded user trust. The future of moderation therefore needs more than human intervention alone: it requires the precision and scalability that AI offers.

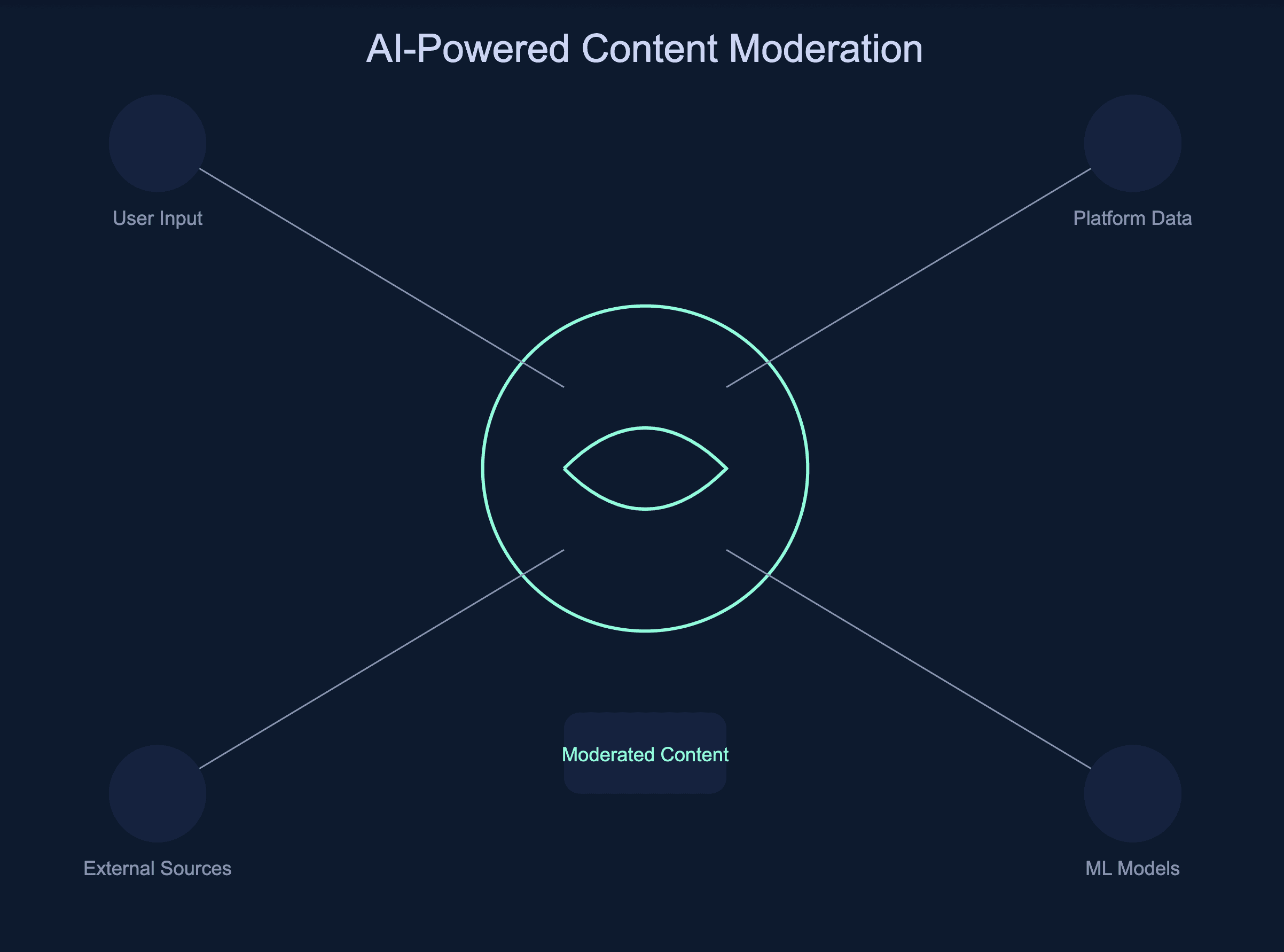

AI-Powered Content Moderation: A Game-Changer

AI brings efficiency, speed, and consistency to content moderation in ways human moderators simply can’t match. Oversai’s approach to AI content moderation focuses on combining machine learning algorithms with natural language processing (NLP) to automatically detect, flag, and even remove inappropriate content in real time.

Here’s how AI transforms the content moderation landscape:

1. Real-Time Scalability

AI-powered moderation systems operate 24/7, processing vast amounts of data at lightning speed. By deploying these models, platforms can moderate millions of pieces of content every minute, ensuring that inappropriate material is dealt with immediately.

2. Advanced Contextual Understanding

One of the biggest challenges in content moderation is context. A phrase that’s harmless in one culture might be offensive in another. AI, especially models powered by NLP, can be trained to understand nuanced language, cultural references, and even sarcasm, making moderation more accurate and less prone to misunderstandings.

3. Reducing Human Bias

AI models, when properly trained, can apply moderation standards consistently across all types of content. They aren’t influenced by fatigue, personal beliefs, or external factors. This reduces the risk of biased decisions and ensures that the platform’s rules are enforced fairly across the board.

4. Improved Decision-Making with Human-AI Collaboration

While AI excels in speed and consistency, the human touch remains invaluable for complex cases. At Oversai, we advocate for a hybrid model where AI takes on the heavy lifting of initial content screening, flagging questionable content for human moderators to review. This collaboration enhances accuracy and allows human moderators to focus on more intricate cases, improving the overall quality of decisions.
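As a rough illustration of the hybrid model described above, the sketch below routes each item by a model's confidence score: very confident violations are removed automatically, uncertain items go to a human review queue, and the rest are allowed. The threshold values and the names `route`, `Decision`, `REMOVE_THRESHOLD`, and `REVIEW_THRESHOLD` are assumptions made for this example, not a description of Oversai's actual system.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
REMOVE_THRESHOLD = 0.95   # at or above this score, remove automatically
REVIEW_THRESHOLD = 0.60   # at or above this score, send to a human moderator


@dataclass
class Decision:
    content_id: str
    action: str   # "remove", "human_review", or "allow"
    score: float  # model's estimated probability of a policy violation


def route(content_id: str, score: float) -> Decision:
    """Decide what happens to one item given the model's violation score."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= REMOVE_THRESHOLD:
        action = "remove"
    elif score >= REVIEW_THRESHOLD:
        action = "human_review"
    else:
        action = "allow"
    return Decision(content_id, action, score)


if __name__ == "__main__":
    # Example scores are made up; a real system would get them from a classifier.
    for cid, s in [("post-1", 0.98), ("post-2", 0.72), ("post-3", 0.10)]:
        print(route(cid, s))
```

The width of the band between the two thresholds is the main design lever here: widening it sends more content to human moderators, while narrowing it leans more heavily on automated decisions.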

5. Adaptability and Continuous Learning

AI systems, especially those leveraging machine learning, continuously improve. As they process more data and receive feedback from human moderators, their ability to make accurate decisions sharpens. This adaptability ensures that platforms can evolve their content moderation policies over time, keeping up with emerging trends, new slang, or even malicious techniques used by bad actors.
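One simple way moderator feedback can shape a system over time is sketched below. It does not retrain a model; it only recalibrates a flagging threshold from human verdicts, which is a much lighter form of adaptation than the continuous learning described above. The function name `pick_threshold`, the feedback format, and the target precision are all assumptions made for this example.

```python
from typing import List, Optional, Tuple

# Each feedback record is (model_score, moderator_confirmed_violation).
Feedback = List[Tuple[float, bool]]


def pick_threshold(feedback: Feedback, target_precision: float = 0.9) -> Optional[float]:
    """Return the lowest score threshold at which the share of flagged items
    that moderators confirmed as violations meets the target precision.

    Returns None if no threshold in the feedback reaches the target.
    """
    candidates = sorted({score for score, _ in feedback})
    for threshold in candidates:
        # Verdicts for every item that would be flagged at this threshold.
        flagged = [confirmed for score, confirmed in feedback if score >= threshold]
        precision = sum(flagged) / len(flagged)
        if precision >= target_precision:
            return threshold
    return None


if __name__ == "__main__":
    # Made-up review outcomes, for illustration only.
    reviews = [(0.2, False), (0.4, False), (0.6, True),
               (0.7, False), (0.8, True), (0.9, True)]
    print(pick_threshold(reviews, target_precision=0.9))
```

Rerunning a calculation like this as new verdicts arrive lets the flagging cutoff track shifts in language or abuse tactics, though it is no substitute for retraining on fresh data.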

The Challenges of AI in Content Moderation

However, AI-powered content moderation is not without its challenges:

False Positives: AI may sometimes flag harmless content as inappropriate. For example, artwork or medical discussions may be misclassified.

Training Data: The accuracy of AI depends on the quality and diversity of the training data. If not carefully curated, biases in the training set can bleed into the AI’s decisions.

Complexity: Moderation often requires understanding not just words but also the context, tone, and user intent—things that can still elude even the most advanced AI systems.

At Oversai, we recognize these challenges. That’s why we invest in robust training methodologies and prioritize human oversight to ensure our models evolve responsibly and align with our commitment to fairness.

The Future of AI in Content Moderation

AI’s role in content moderation is only just beginning. We see a future where AI can not only detect harmful content but also predict when users may be at risk of encountering distressing material. Platforms could create safer, more engaging communities by proactively stepping in when necessary.

At Oversai, we’re committed to pushing the boundaries of what’s possible with AI and content moderation. By combining cutting-edge technology with human oversight, we’re building solutions that prioritize safety, fairness, and efficiency—setting a new standard for digital platforms worldwide.

Want to learn more? Stay tuned for updates from Oversai Perspectives, where we dive deep into AI innovations transforming industries.